In large enterprises, responsible AI lives or dies in the gap between strategy decks and what actually ships. The WEF “Advancing Responsible AI Innovation” playbook gives a useful language for that gap - but it only becomes real when it’s translated into operating habits across product, risk, and technology teams. Here I want to spend some time thinking about how the nine plays in the framework can be used as a practical scaffold for enterprise AI programmes, rather than a poster on the wall.

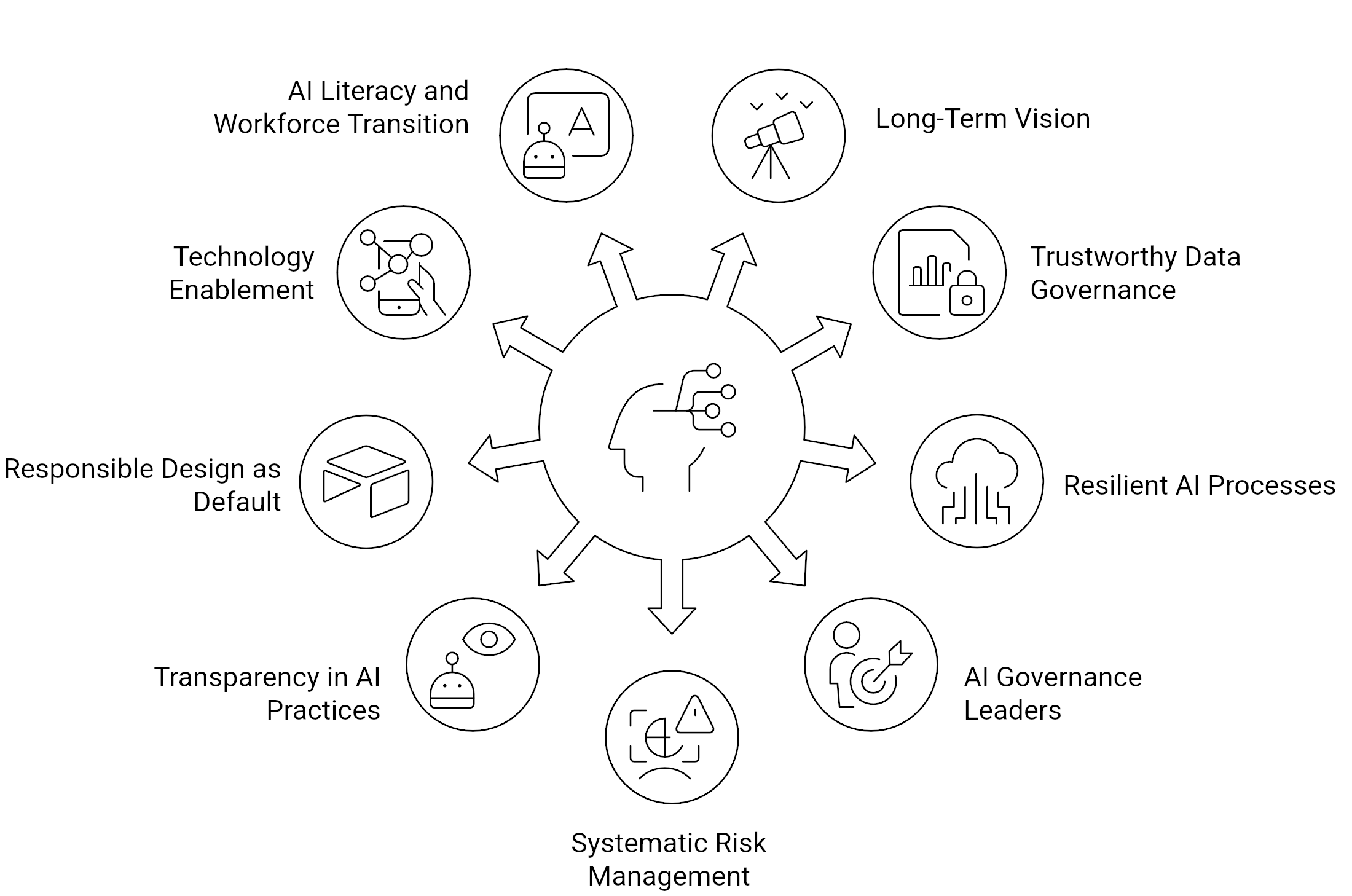

The infographic above pulls out the “key plays” from the WEF report - let’s take some time to see how these might impact an organisation today.

Start with a real strategy, not an AI wishlist

We want to avoid AI roadmaps that are just lists of use cases with an ROI column tacked on the end. The first three plays in the framework push orgs to do something harder: connect AI directly to long-term value, data foundations, and resilience. In practice, that means forcing a conversation at exec level that starts with “what behaviours do we want to change in this business?” or “what business problems really impact our customers and staff?” rather than “which models should we buy?”. If that conversation does not happen, responsible AI defaults to “privacy plus a few model checks”.

In an enterprise context, “Lead with a long term, responsible AI strategy” is a good test of seriousness: is there a single, explicit AI vision that links commercial outcomes, risk appetite, and how responsibility is measured? “Unlock AI innovation with trustworthy data governance” is the follow-on: if the data estate is still siloed, undocumented, and full of one-off extracts, you are not blocked by AI capability, you are blocked by data hygiene. “Design resilient processes” is the unglamorous part: how will this organisation respond when a model fails in production, a regulator changes the rules, or a third-party model provider deprecates an API? Heaven forbid a business-critical system is developed within a citizen developer project, leading to a drop in support and a mess that is costly to clean up.

Governance that does more than say “no”

The second dimension is where most enterprises either get stuck or go overboard. The playbook calls for appointed AI governance leaders, systemic risk management, and transparency around incidents and practices. That sounds obvious, but the implementation pattern matters: centralised gatekeepers kill momentum; total federation leads to AI sprawl.

For a large organisation, “Appoint and incentivize AI governance leaders” should look like a named senior owner (not an unpaid side‑of‑desk role) plus a cross-functional body spanning risk, legal, security, architecture, and business units. “Adopt a systematic, systemic and context specific approach to risk” means mapping one of the big frameworks (NIST AI RMF, ISO, etc.) to your own controls, and then embedding those controls into your existing SDLC, procurement, and change processes instead of inventing yet another parallel workflow.

“Provide transparency into responsible AI practices” is where many programmes could fall down: maintaining an AI inventory, a risk register, and defined incident types with playbooks is dull work, but it is what separates “we hope it’s fine” from a real assurance model.
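To make the inventory idea concrete, here is a minimal sketch of what one record and one assurance check could look like. Everything in it is an assumption for illustration: the field names, the three risk tiers, the example systems, and the rule that medium and high-risk systems need at least one incident playbook are hypothetical, not something the WEF playbook prescribes.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    # Hypothetical three-level scale; substitute your own risk taxonomy.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AISystemRecord:
    """One row in an AI inventory (illustrative fields only)."""
    name: str
    owner: str
    purpose: str
    risk_tier: RiskTier
    incident_playbooks: list[str] = field(default_factory=list)


def assurance_gaps(inventory: list[AISystemRecord]) -> list[str]:
    """Return names of medium/high-risk systems that have no incident playbook."""
    return [
        record.name
        for record in inventory
        if record.risk_tier.value >= RiskTier.MEDIUM.value
        and not record.incident_playbooks
    ]


# Made-up example entries.
inventory = [
    AISystemRecord("invoice-classifier", "finance-ops",
                   "Route supplier invoices", RiskTier.LOW),
    AISystemRecord("credit-scoring-assistant", "retail-lending",
                   "Support credit decisions", RiskTier.HIGH),
    AISystemRecord("hr-chatbot", "people-team",
                   "Answer policy questions", RiskTier.MEDIUM,
                   ["wrong-answer-escalation"]),
]

print(assurance_gaps(inventory))  # ['credit-scoring-assistant']
```

The point is less the code than the habit: once the inventory is structured data rather than a spreadsheet nobody opens, gaps like a high-risk system with no playbook become a query rather than an audit finding.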

Governance here is analogous to working with any hazard. Businesses struggle when the answer is “No - until you have proven it is safe”. The right approach is a pragmatic one: “Yes - and this is how we will do it safely”. This is a minor switch in thinking and culture, but one that has a profound impact on how an org moves.

Making responsible design the default path of least resistance

The third dimension is where this becomes tangible for teams building and using systems. The framework’s final plays focus on responsible‑by‑default design, technology enablement, and workforce literacy and transition. If those sound like HR and engineering problems, that’s because they are - this is where central governance has to become an enabler rather than an auditor.

“Drive AI innovations with responsible design as the default” is an invitation to bake checks into the design toolkit: patterns for human‑in‑the‑loop, guidelines for acceptable automation boundaries, and standard templates for documenting purpose, data, and limits of each system.

“Scale responsible AI with technology enablement” is about using the stack to your advantage: central monitoring for drift, common guardrail services, policy‑as‑code for who can call which models, and “kill switches” for higher‑risk systems. “Increase responsible AI literacy and workforce transition opportunities” is the cultural part: targeted training for different personas, and realistic plans for reskilling and redeploying people whose tasks are being automated, not just a slide about “augmented workers”.
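As a rough illustration of policy-as-code and a kill switch working together, the sketch below puts both behind a single gateway check. The `ModelGateway` and `ModelPolicy` classes, the team names, and the model names are all hypothetical; a real deployment would more likely sit in an API gateway or a dedicated policy engine, so treat this as a sketch of the logic rather than an implementation.

```python
from dataclasses import dataclass, field


@dataclass
class ModelPolicy:
    """Which teams may call a model (illustrative policy shape)."""
    allowed_teams: set[str]
    high_risk: bool = False


@dataclass
class ModelGateway:
    """Hypothetical central checkpoint every model call passes through."""
    policies: dict[str, ModelPolicy]
    killed: set[str] = field(default_factory=set)

    def kill(self, model: str) -> None:
        # Kill switch: block all calls to this model until it is restored.
        self.killed.add(model)

    def restore(self, model: str) -> None:
        self.killed.discard(model)

    def can_call(self, team: str, model: str) -> bool:
        policy = self.policies.get(model)
        if policy is None:
            return False  # deny by default: unregistered models are not callable
        if model in self.killed:
            return False  # kill switch overrides any team permission
        return team in policy.allowed_teams


gateway = ModelGateway(policies={
    "summariser-v2": ModelPolicy(allowed_teams={"support", "marketing"}),
    "credit-scorer": ModelPolicy(allowed_teams={"retail-lending"}, high_risk=True),
})

print(gateway.can_call("support", "summariser-v2"))         # True
print(gateway.can_call("marketing", "credit-scorer"))       # False
gateway.kill("credit-scorer")
print(gateway.can_call("retail-lending", "credit-scorer"))  # False
```

Two design choices are worth noting: unregistered models are denied by default, which nudges teams to register systems in the inventory, and the kill switch is checked before team permissions so that it cannot be bypassed by any single team's access.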

We should also be aware that much innovation and development will happen “out in the trenches”; indeed, my feeling is that this is where the value for an org really lies. You need to support those individuals, not force them to work around your controls.

Using the nine plays as an enterprise checklist

Taken together, the nine plays are less a linear roadmap and more a checklist you can use to stress‑test your own AI programme. A practical pattern for an enterprise is:

- Use Plays 1–3 to align AI with corporate strategy, data architecture, and resilience planning, and write this down in a single, accessible AI strategy document.
- Use Plays 4–6 to define who owns what, how risk is assessed, and how the organisation will talk about incidents and progress, internally and externally.
- Use Plays 7–9 to shape the developer experience, platform capabilities, and learning paths so that “doing the responsible thing” is the easiest way to ship and operate AI systems - externally and internally.

Most organisations will already have fragments of this in place under different labels (data governance councils, model risk committees, security standards).

The value of the WEF framework is that it gives you a coherent, externally recognisable structure to connect them. As is so often the case - having a shared language you can use with regulators, partners, and your own teams is a value enabler and accelerator.